Unleashing the Power of High-Performance Queue Services for PHP Applications

In modern PHP development, handling asynchronous tasks and heavy loads efficiently can make or break an application. Job queues have become an essential component of PHP applications. They let an application hand off work whose result is not needed in the immediate response, such as sending an email or generating a report, and process it separately. As PHP applications grow in complexity and scale, the need for a robust, high-performance queue service becomes increasingly evident, and the right one can significantly improve both throughput and response times.

This article aims to highlight the crucial role of queue services in today’s PHP development landscape and how leveraging queue services implemented in high-performance languages can substantially enhance the performance of PHP applications.

Here are some tasks that can greatly benefit from the utilization of queue services:

- Sending bulk emails — Queue services can send large volumes of emails in the background, so the main server stays free for other work and delivery does not slow down user-facing requests.

- Processing complex tasks that don’t require immediate feedback — Work such as report generation or data exports can be queued and processed in parallel, so the web server can respond right away instead of waiting for the result.

- Handling resource-intensive operations in the background — Queue services delegate CPU- or memory-heavy operations, such as image or video processing, to separate workers, keeping the main application responsive and preventing overload.

- Handling delayed execution and asynchronous processing — Queue services can hold a task until a given interval has passed or an event has occurred, which is useful for actions such as processing payment transactions after a grace period or running verification steps.

- Balancing system load — Queue services distribute tasks across multiple consumers, preventing overload on a single server and improving system stability and responsiveness, especially during peak traffic.
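Delayed execution, as in the grace-period example above, comes down to storing each job together with the earliest time it may run and handing out only the jobs whose time has come. The sketch below shows that idea in plain PHP 8 using the standard SplPriorityQueue. The DelayedQueue class and the job names are hypothetical, and it is only a toy: a real queue service would also persist jobs and deliver them to separate worker processes.

```php
<?php
declare(strict_types=1);

/**
 * Toy in-memory queue with delayed execution (illustration only):
 * every job is stored with the earliest time it is allowed to run.
 */
final class DelayedQueue
{
    private SplPriorityQueue $heap;
    private int $seq = 0;

    public function __construct()
    {
        $this->heap = new SplPriorityQueue();
        // Make top()/extract() return both the job and its priority.
        $this->heap->setExtractFlags(SplPriorityQueue::EXTR_BOTH);
    }

    /** Schedule $job to become available $delaySec seconds after $now. */
    public function push(string $job, int $now, int $delaySec = 0): void
    {
        $runAt = $now + $delaySec;
        // SplPriorityQueue is a max-heap, so both values are negated: the
        // earliest run time (and, on ties, the earliest push) comes out first.
        $this->heap->insert($job, [-$runAt, -$this->seq++]);
    }

    /** Remove and return every job whose run time has arrived, in run-time order. */
    public function due(int $now): array
    {
        $ready = [];
        while (!$this->heap->isEmpty()) {
            $top = $this->heap->top();
            if (-$top['priority'][0] > $now) {
                break; // the earliest remaining job is still in the future
            }
            $ready[] = $this->heap->extract()['data'];
        }
        return $ready;
    }
}

$queue = new DelayedQueue();
$queue->push('send-welcome-email', now: 0);
$queue->push('charge-after-grace-period', now: 0, delaySec: 3600);
$queue->push('run-verification', now: 0, delaySec: 60);

var_dump($queue->due(0));    // only the welcome email is due
var_dump($queue->due(120));  // the verification step
var_dump($queue->due(3600)); // the grace-period charge
```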

Queue Service Implementation in PHP

PHP has been a popular choice for developers due to its simplicity, rich frameworks, extensive community support, and easy deployment methods. However, implementing infrastructure tools like queue services in PHP may not be the most optimal choice.

Here are some reasons why:

- Not Optimized for Infrastructure Tools — PHP’s core strength lies in handling HTTP requests and generating HTML content for web development. Implementing infrastructure tools like queue services, which require long-running processes and efficient resource management, is not PHP’s primary focus.

- Interpreted Language — PHP source is compiled to opcodes that the Zend Engine then interprets at runtime (OPcache caches those opcodes, and PHP 8 adds an optional JIT). While this makes PHP easy to use and deploy, it generally executes CPU-intensive code more slowly than a natively compiled language, and CPU-intensive work is common in queue services.

- Non-Optimized Code — PHP’s flexibility allows developers to write code in different ways to achieve the same result. However, this can lead to non-optimized code, which can impact performance, particularly in a queue service where performance is critical.

- Blocking I/O Operations — By default, PHP follows a synchronous, blocking I/O model. When a PHP application starts an I/O operation, such as reading from a file or querying a database, execution is blocked until the operation completes. This can significantly hinder the performance and scalability of a queue service, which often needs to handle many I/O operations concurrently. Languages with built-in support for non-blocking or asynchronous I/O, like Go, are better suited for implementing high-performance queue services.

In PHP, communication with a queue broker typically happens over sockets. PHP’s stream and socket functions block by default, so a process that talks to several connections at once has to multiplex them explicitly or rely on an event-loop library. Efficient socket communication is crucial for pushing and pulling messages, acknowledging processed messages, and monitoring queue health, so the overhead of managing socket connections in PHP can become a bottleneck that affects the overall performance and reliability of the queue service. The issue becomes more pronounced in distributed systems with a high volume of socket communication between services. Languages that offer efficient, low-level control over network operations, such as Go, whose runtime multiplexes network I/O across goroutines, handle socket communication more efficiently, resulting in a more performant and reliable queue service.
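To make the blocking-I/O point concrete: fread() on a socket waits until data arrives, so a PHP process that serves several connections has to multiplex them itself, for example with stream_select(). The sketch below does that for two local socket pairs that stand in for broker connections. The readReady() helper is hypothetical, and the Unix-domain socket pairs assume Linux or macOS. In Go, the runtime performs this multiplexing automatically, so each connection can simply be read in its own goroutine.

```php
<?php
declare(strict_types=1);

/**
 * Wait on several sockets at once and read from whichever are ready.
 * Calling fread() on each socket in turn would instead block on the first
 * idle one, even if the others already had data waiting.
 *
 * @param array<string, resource> $sockets connection name => socket
 * @return array<string, string>           connection name => data read
 */
function readReady(array $sockets, int $timeoutSec = 1): array
{
    $read = array_values($sockets);
    $write = null;
    $except = null;

    // Waits at most $timeoutSec, then leaves only the readable sockets in $read.
    if (stream_select($read, $write, $except, $timeoutSec) === false) {
        throw new RuntimeException('stream_select() failed');
    }

    $result = [];
    foreach ($sockets as $name => $socket) {
        if (in_array($socket, $read, true)) {
            $result[$name] = (string) fread($socket, 8192);
        }
    }
    return $result;
}

// Two local socket pairs stand in for two broker connections.
[$emailConn, $emailPeer] = stream_socket_pair(STREAM_PF_UNIX, STREAM_SOCK_STREAM, STREAM_IPPROTO_IP);
[$defaultConn, $defaultPeer] = stream_socket_pair(STREAM_PF_UNIX, STREAM_SOCK_STREAM, STREAM_IPPROTO_IP);

// Only the "default" connection has data; the "email" one stays idle.
fwrite($defaultPeer, 'job-42');

print_r(readReady(['email' => $emailConn, 'default' => $defaultConn]));
```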

Harnessing the Power of Go

In contrast, Go, developed at Google, is designed for building simple, reliable, and efficient software. Its built-in concurrency mechanisms make it easier to write programs that do more work in less time.

Go particularly excels in developing:

- Cloud-native applications

- Distributed networked services

- Fast and elegant command-line interfaces (CLIs)

- DevOps and SRE support tools

- Web development tools

- Stand-alone utilities

Given these remarkable strengths, Go has emerged as an exceptional choice for implementing services such as queue systems.

Here are the key reasons why Go is particularly well-suited for building queue services:

- Concurrency and Goroutines — Go provides built-in support for concurrency through goroutines and channels. Goroutines are lightweight functions that run concurrently and are scheduled by the Go runtime rather than the operating system, so a single process can run thousands of them cheaply, while channels let them pass data safely. This simplifies handling many tasks at once within a queue service, improving overall performance and responsiveness.

- Efficient Resource Management — Go’s runtime and its concurrent, low-latency garbage collector are designed to keep resource usage predictable, which makes Go well suited to long-running processes like queue services. Memory management adds little overhead, and garbage-collection pauses stay short.

- Fast and Efficient — Go compiles directly to machine code, which gives it fast execution. This is particularly advantageous for the CPU-intensive tasks commonly encountered in queue services.

- Low-Level Socket Control — Go offers low-level control over network operations, allowing for efficient socket communication. This capability is crucial for queue services that need to handle a high volume of socket communication between different services.

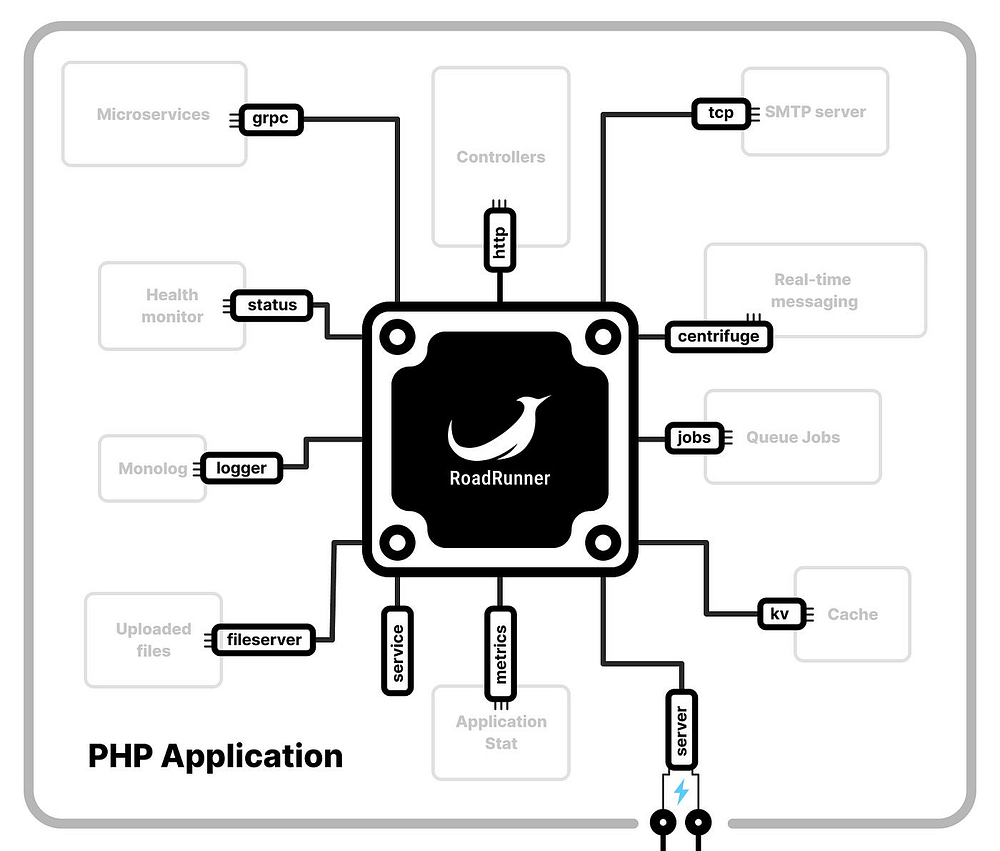

RoadRunner: Unleashing Go’s Power for PHP Queue Services

RoadRunner, developed alongside the Spiral Framework, shows what Go can do for PHP applications. It is an application server written in Go that runs your PHP code, combining the strengths of both languages and offering PHP developers a compelling alternative to the traditional PHP-FPM setup. Its jobs plugin, a powerful queue service, is simple to configure: the queue broker drivers are implemented on the Go side, so there is no need to install additional PHP extensions for each broker. This lets developers focus on application logic instead of intricate setup procedures, while their PHP projects benefit from Go’s performance and concurrency.

How it works

RoadRunner manages a pool of PHP processes, called workers, and routes incoming requests from its various plugins to them. Communication between RoadRunner and the workers uses the Goridge protocol over the relay configured in server.relay (pipes in the configurations below). For the jobs plugin, RoadRunner receives tasks from the queue broker and passes each one to a free PHP worker, which processes it and reports back whether it succeeded.

RoadRunner also provides an RPC interface (the rpc.listen address in the configurations below) through which the application can talk to the server. The application can use it, for example, to push jobs and to manage queue pipelines dynamically, such as pausing or resuming them at runtime.

RoadRunner keeps PHP workers alive between incoming requests and tasks. This removes the bootstrap cost (such as framework initialization) from every request and can significantly speed up a heavy application.
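The gain from keeping workers alive comes from paying the bootstrap cost once per worker instead of once per task. The toy model below shows the shape of that loop in plain PHP. The LongLivedWorker class and its boot() step are hypothetical stand-ins for framework initialization, not RoadRunner’s API.

```php
<?php
declare(strict_types=1);

/**
 * Toy model of a long-lived worker: expensive initialization happens once,
 * then the same process handles many tasks. In a one-process-per-request
 * model, boot() would run again for every task.
 */
final class LongLivedWorker
{
    public int $boots = 0;

    /** @var array<string, string>|null stands in for a booted framework/container */
    private ?array $app = null;

    private function boot(): array
    {
        $this->boots++;
        // Imagine loading config, wiring a service container, opening connections...
        return ['greeting' => 'Hello'];
    }

    public function handle(string $task): string
    {
        $this->app ??= $this->boot(); // boot lazily, exactly once per process
        return "{$this->app['greeting']}, {$task}";
    }
}

$worker = new LongLivedWorker();
foreach (['task-1', 'task-2', 'task-3'] as $task) {
    echo $worker->handle($task), PHP_EOL;
}
echo "boots: {$worker->boots}", PHP_EOL; // boots: 1
```

The flip side of a long-lived process is that state also survives from one task to the next, so services should not keep per-request data around between tasks.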

Exploring Queue Strategies

When working with RoadRunner, you have the flexibility to configure either a single instance or multiple instances for consuming and producing jobs in your queue pipelines.

Single RoadRunner Instance

For small projects that don’t need a distributed queue, a single RoadRunner instance can both produce and consume jobs. This approach suits background job processing on a single machine. RoadRunner’s memory driver handles a large number of tasks in a short time; keep in mind that jobs held by the memory driver are lost if the RoadRunner process stops.

Here is an example configuration:

```yaml
version: "3"

rpc:
  listen: tcp://127.0.0.1:6001

server:
  command: php consumer.php
  relay: pipes

jobs:
  consume: [ "local" ]

  pipelines:
    local:
      driver: memory
      config:
        priority: 10
        prefetch: 10
```

In this configuration, jobs.consume tells the RoadRunner instance to consume tasks from the local pipeline, which uses the memory driver, and server.command starts the PHP workers (consumer.php) that process them.

Multiple RoadRunner Instances

For a distributed setup that involves a queue broker like RabbitMQ, you can configure multiple RoadRunner instances to handle job processing. In this scenario, some instances will act as producers, pushing jobs into the queue, while others will act as consumers, consuming tasks from the queue.

Here is an example configuration for a producer instance:

```yaml
version: "3"

rpc:
  listen: tcp://127.0.0.1:6001

server:
  command: php consumer.php
  relay: pipes

amqp:
  addr: amqp://guest:guest@127.0.0.1:5672

jobs:
  consume: [ ]

  pipelines:
    email:
      driver: amqp
      config:
        queue: email
        # ...

    default:
      driver: amqp
      config:
        queue: default
        # ...
```

In this configuration:

- amqp.addr specifies the address of the RabbitMQ broker to connect to.

- jobs.consume is an empty array, meaning this RoadRunner instance acts only as a producer: it pushes tasks into the queue but does not consume any.

- jobs.pipelines.email and jobs.pipelines.default define two pipelines that use the AMQP driver. Each pipeline is bound to its own queue (email and default in this case).

To configure a RoadRunner instance as a consumer, you need to specify the pipelines it will consume in the jobs.consume section. Multiple consumer instances can be connected to the queue broker, and each instance can be configured to consume tasks from one or more pipelines. This enables parallel and scalable job processing.

Here’s an example configuration for a consumer instance:

```yaml
jobs:
  consume: [ "email" ]

  pipelines:
    email:
      driver: amqp
      config:
        queue: email
        # ...
```

In this configuration, the consumer instances are set to consume tasks from the email pipeline.

One advantage of employing multiple consumer instances is the capability to scale the job processing capacity horizontally. By adding more consumer instances, you can handle a greater number of tasks simultaneously and achieve higher throughput in your queue system.

To scale consumer instances, replicate the consumer configuration for each new instance, making sure each one connects to the same queue broker and consumes the desired pipelines. Because the instances compete for messages from the same broker queues, you can add consumers without changing the producers or the existing consumers.

By distributing the workload among multiple consumer instances, you can achieve load balancing and increase the overall efficiency of your queue processing. Each consumer instance can consume tasks from different pipelines or a combination of shared and dedicated pipelines, depending on your application’s requirements.

This approach allows you to tailor the configuration to the specific needs of your system. For example, you can allocate dedicated consumer instances for high-priority pipelines or specific types of tasks, ensuring efficient processing for critical components. At the same time, you can have shared consumer instances that handle general tasks, optimizing resource utilization and overall system performance.
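How tasks spread across dedicated and shared consumers can be modeled in a few lines of PHP. The sketch below is a simplification: it assumes each pipeline’s tasks go to that pipeline’s consumers in turn. RabbitMQ’s default round-robin dispatch to competing consumers behaves roughly like this, although prefetch settings and processing speed change the exact split in practice. The instance names are hypothetical, and each instance is described only by its jobs.consume list.

```php
<?php
declare(strict_types=1);

/**
 * Simplified model of competing consumers: every task in a pipeline goes to
 * the next instance (round-robin) among the instances consuming that pipeline.
 *
 * @param array<string, list<string>> $instances instance name => its jobs.consume list
 * @param list<string>                $tasks     pipeline of each task, in push order
 * @return array<string, int>                    instance name => tasks received
 */
function distribute(array $instances, array $tasks): array
{
    // Invert the configuration: pipeline => instances that consume it.
    $consumersOf = [];
    foreach ($instances as $instance => $pipelines) {
        foreach ($pipelines as $pipeline) {
            $consumersOf[$pipeline][] = $instance;
        }
    }

    $received = array_fill_keys(array_keys($instances), 0);
    $next = []; // pipeline => round-robin cursor
    foreach ($tasks as $pipeline) {
        $consumers = $consumersOf[$pipeline] ?? [];
        if ($consumers === []) {
            continue; // no consumer for this pipeline: in a real broker the task stays queued
        }
        $i = $next[$pipeline] ?? 0;
        $received[$consumers[$i % count($consumers)]]++;
        $next[$pipeline] = $i + 1;
    }
    return $received;
}

$instances = [
    'email-1'  => ['email'],            // dedicated to the high-priority pipeline
    'email-2'  => ['email'],
    'shared-1' => ['email', 'default'], // shared instance for general work
];
$tasks = array_merge(array_fill(0, 6, 'email'), array_fill(0, 4, 'default'));

print_r(distribute($instances, $tasks));
```

In this run the shared instance receives six of the ten tasks, because it is the only consumer of the default pipeline; adding another instance that consumes default would split that load, which is the horizontal scaling described above.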

Monitoring

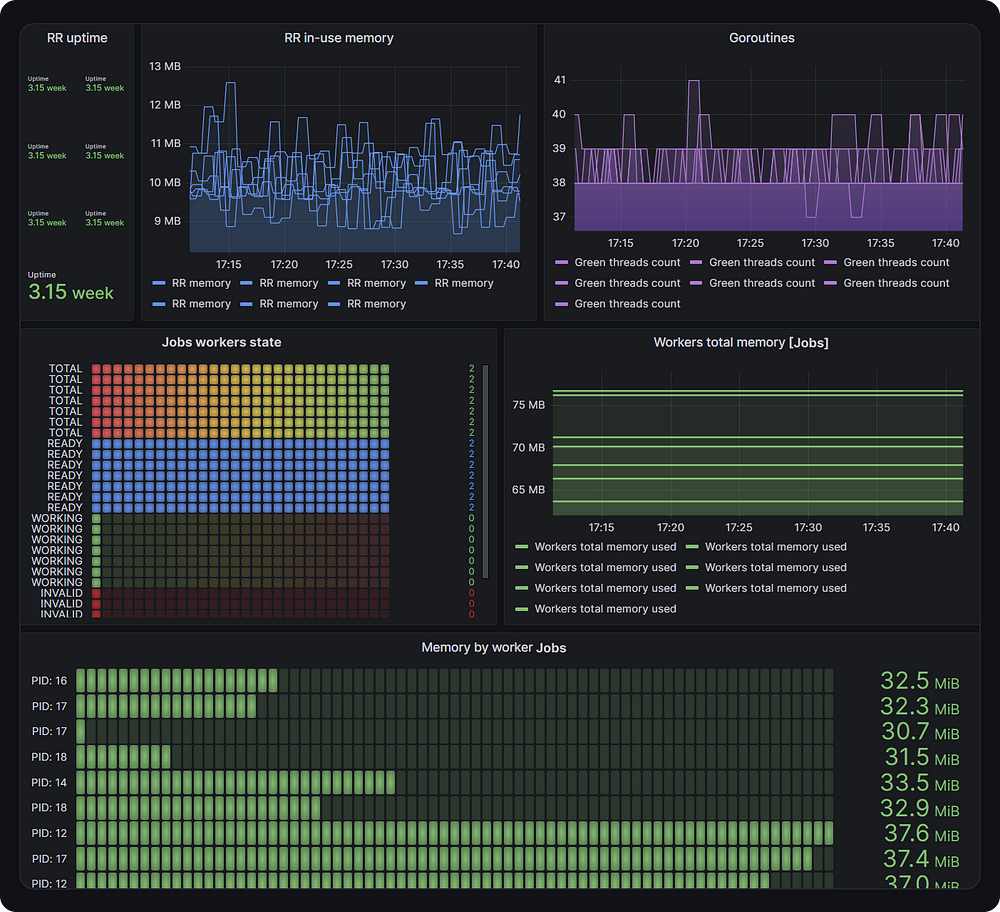

RoadRunner goes beyond job processing and exposes metrics for monitoring. Its metrics plugin publishes data in a format Prometheus can scrape, so you can collect detailed metrics about job processing, worker performance, and resource utilization.

Grafana dashboard

Additionally, RoadRunner provides a pre-configured Grafana dashboard that allows you to visualize the collected metrics in a user-friendly and customizable way. With Grafana, you can create rich visualizations, set up alerts based on defined thresholds, and gain valuable insights into the performance and health of your job processing system.

Task payload

As a PHP developer, pay attention to the size of the job payloads your framework produces and make sure you use an appropriate serializer.

The size of the payload has a big impact on how efficiently the application communicates with the queue service. When the payload is large, it can cause several issues:

- Increased Network Latency — Larger payloads take longer to transmit over the network, increasing latency and slowing down the overall application performance.

- Higher Memory Usage — Larger payloads consume more memory, both in the queue service and in the PHP application. This increased memory usage can affect the performance of other parts of the application.

- Increased CPU Usage — Processing larger payloads can consume more CPU cycles, affecting the application’s performance and scalability.

- Reduced Throughput — The larger the payload, the fewer the number of messages that can be processed per unit of time, reducing the overall throughput of the queue service.

To avoid these drawbacks, keep the payload exchanged between the PHP application and the queue service as small as possible. One effective approach is a compact binary serialization format such as Protocol Buffers (protobuf), which produces the smallest payload of the formats in the comparison below.

The following table compares the payload sizes for different serialization formats:

| Serialization format and payload type | Size (bytes) |
|---|---|
| Protobuf object | 193 |
| igbinary array | 348 |
| JSON array | 430 |
| igbinary object | 482 |
| Native serializer array | 635 |
| Native serializer object | 848 |
| SerializableClosure array | 961 |
| SerializableClosure object | 1174 |
| SerializableClosure array w/secret | 1111 |
| SerializableClosure object w/secret | 1325 |

In this comparison, the protobuf payload is less than half the size of the JSON array and under a quarter of the native serializer object. Smaller payloads mean faster network transfers, lower memory and CPU usage, and higher throughput for the queue service, which in turn leads to a more efficient and scalable application.
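You can run the same kind of comparison on your own job payloads. The PHP 8 sketch below measures the JSON and native serializer sizes of a hypothetical SendEmailJob as an array and as an object, adds igbinary only if that extension happens to be loaded, and leaves protobuf out because it needs a .proto schema and generated classes. It also turns a payload size into a rough upper bound on messages per second for a given link, ignoring protocol and framing overhead, to make the Reduced Throughput point concrete. Your numbers depend on your data and will not match the table exactly.

```php
<?php
declare(strict_types=1);

// Hypothetical job class, used only to measure payload sizes.
final class SendEmailJob
{
    public function __construct(
        public int $userId,
        public string $template,
        public array $vars,
    ) {}
}

/** @return array<string, int> serialization format => payload size in bytes, smallest first */
function payloadSizes(SendEmailJob $job): array
{
    $asArray = get_object_vars($job);

    $sizes = [
        'JSON array'               => strlen(json_encode($asArray, JSON_THROW_ON_ERROR)),
        'Native serializer array'  => strlen(serialize($asArray)),
        'Native serializer object' => strlen(serialize($job)), // includes the class name
    ];
    if (function_exists('igbinary_serialize')) { // only when ext-igbinary is installed
        $sizes['igbinary array']  = strlen(igbinary_serialize($asArray));
        $sizes['igbinary object'] = strlen(igbinary_serialize($job));
    }
    asort($sizes);
    return $sizes;
}

/** Upper bound on messages per second if payload bytes were the only traffic. */
function maxMessagesPerSecond(int $bytesPerSecond, int $payloadBytes): int
{
    return intdiv($bytesPerSecond, $payloadBytes);
}

print_r(payloadSizes(new SendEmailJob(42, 'welcome', ['name' => 'Ann'])));

// 1 Gbit/s is 125,000,000 bytes/s; payload sizes taken from the table above.
echo maxMessagesPerSecond(125_000_000, 193), PHP_EOL;  // 647668
echo maxMessagesPerSecond(125_000_000, 1174), PHP_EOL; // 106473
```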

Conclusion

RoadRunner, with its jobs plugin, is an awesome queue service for PHP applications. It works great with PHP frameworks and brings the power and performance of Go to your projects. With RoadRunner, you can efficiently handle jobs, whether you’re using a single instance or a distributed setup.

RoadRunner acts like a supercharged processor for PHP apps, making them faster, more responsive, and robust.

In a nutshell, RoadRunner helps PHP developers overcome performance limitations. It’s super fast, flexible, and user-friendly, making it a fantastic choice for powering your PHP applications.

Want more? Unlock the Power of Advanced Workflow Orchestration

There is also an awesome workflow management solution called Temporal. If you’re familiar with queue services like RoadRunner, you’re in for a treat, because Temporal takes workflow management to a whole new level. It’s like having superpowers for handling complex workflows in a simple and elegant manner. Let me show you why it’s the bee’s knees.

In the world of PHP development, we often find ourselves juggling various tasks and processes that need to be executed in a specific order. That’s where Temporal shines. It allows us to write expressive and powerful workflows in a way that’s easy to understand and maintain.

Let’s dive into an example that showcases the beauty of Temporal.

Imagine you need to handle user subscriptions on a monthly basis. With Temporal, it becomes a straightforward process. Here’s a simple example, based on the subscription sample from Temporal’s PHP samples, to illustrate it:

```php
<?php

/**
 * This file is part of Temporal package.
 *
 * For the full copyright and license information, please view the LICENSE
 * file that was distributed with this source code.
 */

declare(strict_types=1);

namespace Temporal\Samples\Subscription;

use Carbon\CarbonInterval;
use Temporal\Activity\ActivityOptions;
use Temporal\Exception\Failure\CanceledFailure;
use Temporal\Workflow;

/**
 * Demonstrates a long-running process to represent a user subscription workflow.
 */
class SubscriptionWorkflow implements SubscriptionWorkflowInterface
{
    private $account;

    public function __construct()
    {
        // Activity stub for the account operations. AccountActivityInterface,
        // like SubscriptionWorkflowInterface, is assumed to be defined elsewhere
        // in the sample; the timeout value is illustrative.
        $this->account = Workflow::newActivityStub(
            AccountActivityInterface::class,
            ActivityOptions::new()
                ->withScheduleToCloseTimeout(CarbonInterval::seconds(2))
        );
    }

    public function subscribe(string $userID)
    {
        yield $this->account->sendWelcomeEmail($userID);

        try {
            $trialPeriod = true;
            while (true) {
                // Lower the period duration to observe workflow behavior
                yield Workflow::timer(CarbonInterval::days(30));

                if ($trialPeriod) {
                    yield $this->account->sendEndOfTrialEmail($userID);
                    $trialPeriod = false;
                    continue;
                }

                yield $this->account->chargeMonthlyFee($userID);
                yield $this->account->sendMonthlyChargeEmail($userID);
            }
        } catch (CanceledFailure $e) {
            // Run the cleanup in a detached scope so these activities still
            // execute even though the workflow itself has been cancelled.
            yield Workflow::asyncDetached(
                function () use ($userID) {
                    yield $this->account->processSubscriptionCancellation($userID);
                    yield $this->account->sendSorryToSeeYouGoEmail($userID);
                }
            );
        }
    }
}
```

In this example, the subscribe method contains the workflow logic for managing monthly subscriptions. The key is the Workflow::timer function, which durably pauses the workflow for a given duration on each iteration of the loop.

The sample sets the timer to CarbonInterval::days(30), so after the trial period the charge-and-notify steps run roughly once a month. Temporal takes care of the scheduling and coordination, including keeping the timer alive across worker restarts, freeing you from the hassle of managing it manually.

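Moreover, Temporal provides built-in fault tolerance and scalability. When the workflow is cancelled, for example because the user cancels the subscription, Temporal raises a CanceledFailure inside it. The catch block handles this gracefully by running the cancellation activities in a detached scope via Workflow::asyncDetached, so they still execute even though the workflow itself has been cancelled.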

With Temporal, managing complex periodic workflows like monthly subscriptions becomes a breeze. The framework handles the scheduling, retries, and fault tolerance, allowing you to focus on the essential aspects of your application.
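The yield statements in subscribe() rely on ordinary PHP generators: the workflow yields a command (a timer or an activity call) and is resumed when that command completes. The toy driver below is not how Temporal is implemented and uses no Temporal API. subscriptionSteps() and traceCommands() are hypothetical helpers that replace timers and activities with plain strings, so you can trace the order of actions the loop produces, for instance that the first charge happens only after the trial period. Cancellation is not modeled.

```php
<?php
declare(strict_types=1);

/**
 * Same control flow as SubscriptionWorkflow::subscribe(), but each yield
 * produces a plain string describing the command instead of a promise.
 */
function subscriptionSteps(string $userId): Generator
{
    yield "sendWelcomeEmail($userId)";
    $trialPeriod = true;
    while (true) {
        yield 'timer(30 days)';
        if ($trialPeriod) {
            yield "sendEndOfTrialEmail($userId)";
            $trialPeriod = false;
            continue;
        }
        yield "chargeMonthlyFee($userId)";
        yield "sendMonthlyChargeEmail($userId)";
    }
}

/** Drive the generator for up to $maxSteps commands, "completing" each one instantly. */
function traceCommands(Generator $workflow, int $maxSteps): array
{
    $log = [];
    for ($i = 0; $i < $maxSteps && $workflow->valid(); $i++) {
        $log[] = $workflow->current();
        // A real runtime would send the command's result back in here.
        $workflow->send(null);
    }
    return $log;
}

print_r(traceCommands(subscriptionSteps('user-1'), 8));
```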